The virtual production tools you need from pre to post

In 2013, Gravity became one of the first popular projects to use virtual production on LED walls. In the decade since, the industry has gradually warmed to the technology, but it didn’t really take a giant leap until The Mandalorian.

At this stage, people are still figuring out new and exciting ways to solve problems. “You have to break the rules to do new things and that's what virtual production is all about. It's finding ways to solve things,” said Gary Adcock, a new technology advocate and executive director of FilmScape Chicago. Even with a tech revolution underway in the field, there are commonalities in how many people currently do VP, so we researched and broke down the basic tools.

Overall, many elements of a virtual production are the same as in a green screen production, but with more flexibility. Most of the equipment is also the same, with the addition of a powerful computer running a real-time rendering engine such as Unreal Engine to power what is displayed on the LED wall.

Pre-Production

The first step is to break down the script to determine which shots will use the LED wall. For anything that would traditionally use a green screen, the wall could be used instead. This benefits the actors, who no longer have to rely purely on their imagination and markers.

The next step is pre-visualization. The director will work with the art and VFX departments to build assets. The assets will be created in a 3D modeling program such as Autodesk Maya, Cinema 4D, ZBrush, or Blender, and then put into the real-time engine to make virtual locations. These assets will be used for previs and during production on the LED wall. The virtual locations can be viewed on a traditional computer screen or even in full 3D in a VR headset.

Since the virtual locations and the real-time engine will be the same for previs and production, the results will look a lot closer to the final finished product than would be achieved from standard storyboarding or animatics.

Pre-production tools needed for virtual production:

- 3D modeling software
- Real-time rendering engine
- VR headset or computer capable of rendering 3D environments
- Optional: 3D artwork versioning control software

Production

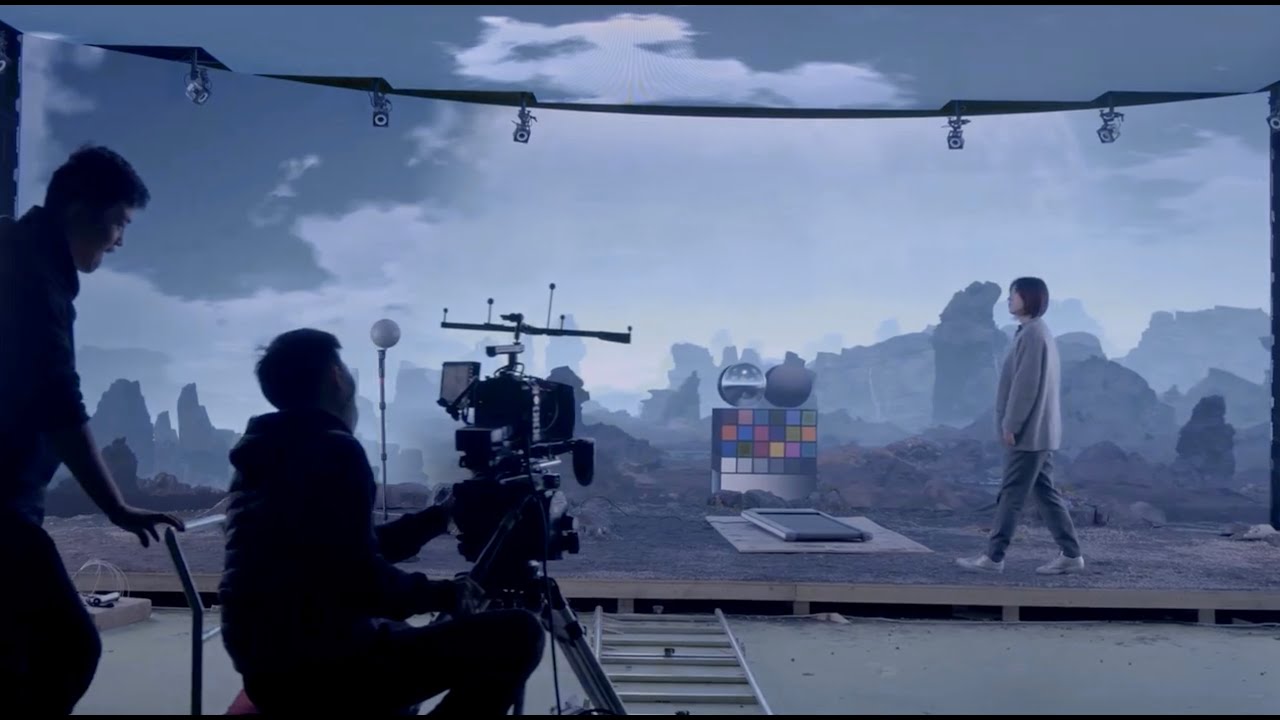

The biggest difference between a “normal” production and a virtual production is the giant LED wall that’s used as a background to the action. It’s visually striking to walk on set and see an actual “location” instead of a green screen.

A beefy computer, with a heavy-duty GPU, is needed to run the real-time engine that generates the graphics that go on the wall. It needs to be powerful because the LED wall is constantly updating the virtual scene in real time. There is often an LED wall processor connected between the rendering engine and the wall. It can do multi-screen processing, change the resolution format, switch signals, scale the image, and even improve image quality using algorithms.
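To make the multi-screen processing idea concrete, here is a minimal sketch of how one rendered frame might be divided among the panels of a wall. It assumes a simple grid of identical panels whose sizes divide the frame evenly; the function name `panel_regions` and the example dimensions are hypothetical, and real LED processors handle far more (scaling, irregular layouts, color calibration).

```python
def panel_regions(frame_width_px, frame_height_px, cols, rows):
    """Return the source rectangle each panel should display.

    Assumes (for illustration only) a grid of identical panels.
    Keys are (row, col); values are (x, y, width, height) in pixels,
    measured from the top-left corner of the rendered frame.
    """
    if frame_width_px % cols or frame_height_px % rows:
        raise ValueError("frame size must divide evenly among the panels")
    panel_w = frame_width_px // cols
    panel_h = frame_height_px // rows
    return {
        (row, col): (col * panel_w, row * panel_h, panel_w, panel_h)
        for row in range(rows)
        for col in range(cols)
    }


# Hypothetical example: a 3840x2160 frame spread over a 4-wide, 2-high wall.
regions = panel_regions(3840, 2160, cols=4, rows=2)
for position, rect in sorted(regions.items()):
    print(position, rect)
```

Each panel ends up showing only its own slice of the frame, which is roughly what has to happen before the image on the wall reads as one continuous picture.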

A simple example of why real-time rendering is helpful would be a DMX-networked light flicking on or off in the foreground of the scene. This light could be synchronized with the rendering engine so that a matching light goes on or off in the rendered scene on the wall.
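The light synchronization described above can be sketched in a few lines. This is an illustration only, not how any particular engine implements it: the `VirtualLight` class and `apply_dmx_frame` function are hypothetical, and the only DMX detail relied on is that each channel carries an 8-bit level from 0 to 255.

```python
from dataclasses import dataclass

DMX_MAX_LEVEL = 255  # DMX512 channel levels are 8-bit values (0-255)


@dataclass
class VirtualLight:
    """Hypothetical stand-in for a light in the rendered scene."""
    name: str
    dmx_channel: int        # 1-based channel shared with the practical light
    max_intensity: float    # brightness in arbitrary engine units at full level
    intensity: float = 0.0


def apply_dmx_frame(lights, frame):
    """Copy the levels from one DMX frame onto the matching virtual lights.

    `frame` is a bytes-like object where frame[0] is the level of channel 1.
    Lights whose channel is not present in the frame are left unchanged.
    """
    for light in lights:
        index = light.dmx_channel - 1
        if 0 <= index < len(frame):
            light.intensity = light.max_intensity * frame[index] / DMX_MAX_LEVEL


lamp = VirtualLight(name="desk_lamp", dmx_channel=1, max_intensity=800.0)
apply_dmx_frame([lamp], bytes([255]))  # practical lamp switched on
print(lamp.name, lamp.intensity)
apply_dmx_frame([lamp], bytes([0]))    # practical lamp switched off
print(lamp.name, lamp.intensity)
```

Because the same channel drives both the physical fixture and its virtual counterpart, the two stay in step for as long as the engine keeps reading frames.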

A more complicated and integral reason for the real-time engine is to give the scene parallax. To understand parallax, imagine a scene with a camera pointed at a tree with a dog hiding behind it. If the background is static, then no matter how far the camera is moved to the right, it’d be impossible to see the dog hiding behind the tree. With a real-time engine, however, moving the camera to the right would reveal the dog behind the tree. Moving the camera in this scenario will shift the entire virtual scene on the wall. It’s almost like moving the joystick in a 3D game to make a first-person POV character peer around a corner. All of this requires rendering the scene in real time, which the software engine and GPU accomplish.
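The tree-and-dog example can be worked through with a little geometry. The sketch below is a simplified top-down (2D) model, not what a real engine does internally: it finds where on the wall a virtual object must be drawn so that it lines up with the camera's line of sight. The function name `project_to_wall` and all the distances are assumptions chosen for illustration.

```python
def project_to_wall(cam_x, cam_z, point_x, point_z, wall_z):
    """Return the x position on the wall where a virtual point must be drawn.

    Top-down view: x is left/right, z is distance away from the stage.
    The wall is the line z = wall_z, and the virtual point sits behind it.
    The result is where the line from the camera to the point crosses the wall.
    """
    if point_z <= cam_z:
        raise ValueError("the virtual point must be in front of the camera")
    t = (wall_z - cam_z) / (point_z - cam_z)
    return cam_x + (point_x - cam_x) * t


WALL_Z = 4.0           # wall is 4 m from the camera's starting position
TREE = (0.0, 6.0)      # virtual tree, 2 m "behind" the wall
DOG = (0.0, 8.0)       # virtual dog, directly behind the tree

for cam_x in (0.0, 2.0):  # camera centered, then moved 2 m to the right
    tree_on_wall = project_to_wall(cam_x, 0.0, *TREE, WALL_Z)
    dog_on_wall = project_to_wall(cam_x, 0.0, *DOG, WALL_Z)
    print(f"camera x={cam_x}: tree at {tree_on_wall:.2f}, dog at {dog_on_wall:.2f}")
```

With the camera centered, the tree and the dog land on the same spot on the wall, so the tree covers the dog. Once the camera moves right, the two positions separate, and the more distant dog is drawn to the right of the tree, which is the parallax a static background cannot provide.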

To achieve parallax, a tracker is put on top of the camera, and sensors are set up to detect where the camera is in relation to the screen. There are two general types of camera trackers: Outside-In, where sensors around the room locate the camera at all times; and Inside-Out, where a sensor on the camera continually monitors its own position, typically by measuring it relative to a constellation of little dots on the ceiling.

Having an LED wall helps many parts of production compared to a green screen, since people can see how the actual shot will look. Framing and lighting can be done more accurately because the background location and the effects are displayed on the wall instead of having to be imagined.

Production tools needed for virtual production:

- LED wall or volume
- PC with a powerful GPU to run the real-time engine
- LED wall processor(s)
- Pixel mapping
- Tracker and sensors for camera

Post-Production

The job of an editor can begin much earlier with virtual production. Throughout pre-production, VFX shots were likely edited together for previs, so during post-production, they can be replaced with the completed shots that contain the actors. In a traditional green screen shoot, editors would only receive shots of the actors with a green background.

With virtual production, post-production is more like that of a project shot on location than one shot on a green screen, because the vast majority of VFX decisions have typically already been made. Overall, the process is more iterative, with a lot of the work front-loaded into pre-production instead of post. As a result, everyone has a better understanding of their work as they’re doing it and should be able to refine it as they go.

Post-production tools needed for virtual production:

- There's no special toolkit required for post once your virtual production has been shot; just standard editing software
