12 key roles and teams, explained

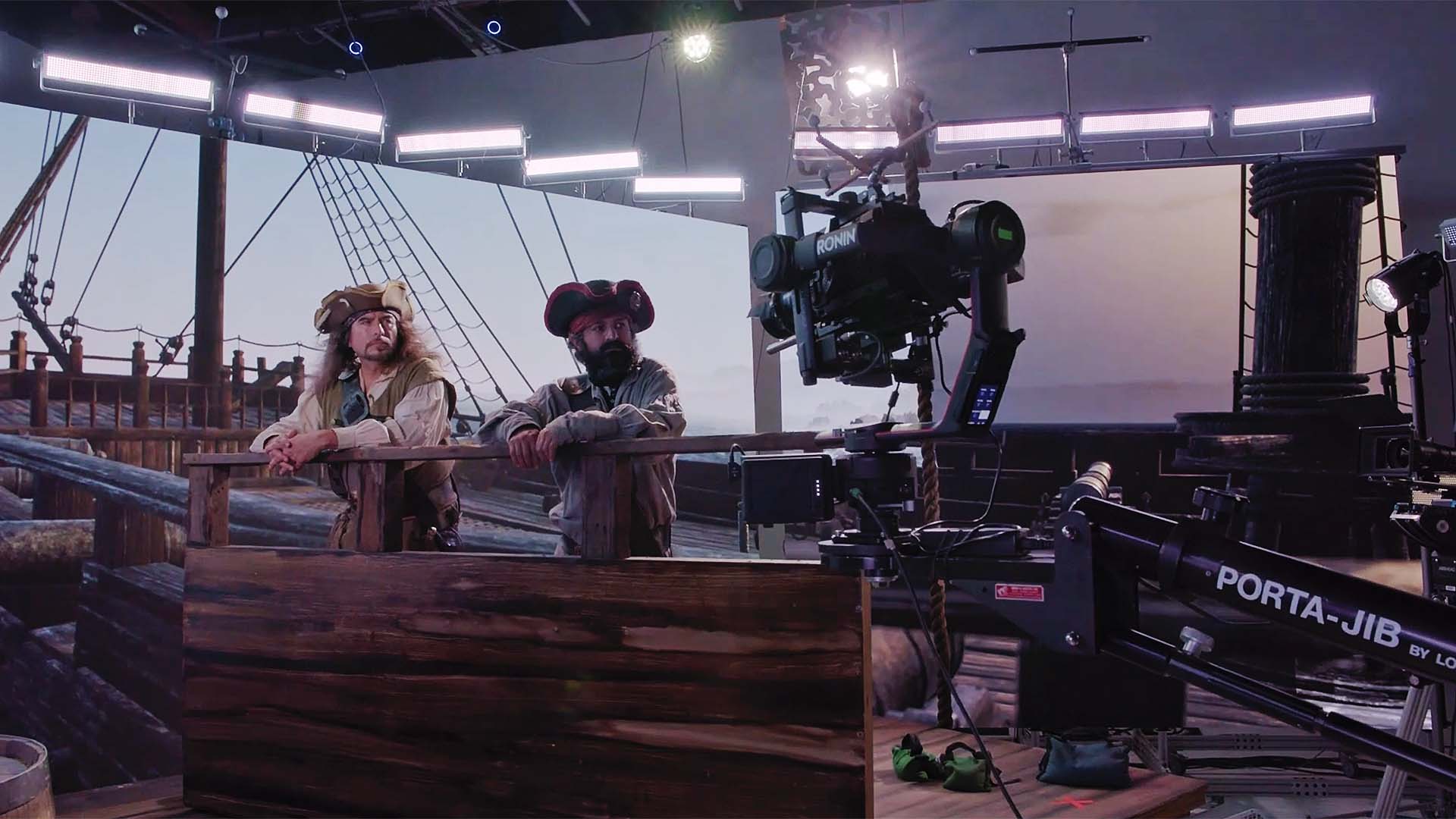

Filmmaking technology has constantly evolved over the last century, but virtual production using an LED wall is the kind of technological leap that very few people on set have dealt with before.

“It’s as revolutionary as taking a 35 millimeter still camera and shooting video with it. Twenty years ago that was unheard of,” said Gary Adcock, a new technology advocate and executive director of FilmScape Chicago.

What this seismic change means for producers and crew members is still being established.

“This technology is allowing certain genres and filmmakers [to] achieve something that previous budgets would not,” said Jocelyn Hsu, co-founder and chief product officer of ARwall.

The more affordable virtual production technology gets, the more popular it will become. As a result, professionals will encounter new benefits, challenges, responsibilities, and tools. Here are the key players you need to run a virtual production along with what each role can expect.

Producer

A producer's job is to manage the entire project from start to finish, so they need a general understanding of every aspect of virtual production (VP).

One of the biggest changes for everyone is the workflow, which is non-linear for VP. Because producers manage the whole production, they need to be aware of how it differs for every crew member. More time and effort go into pre-production, especially for building out assets and previs (pre-visualization).

VP offers producers big advantages, mainly time and money savings along with less guesswork.

For example, according to Hsu, for the comedy series Our Flag Means Death, director “Taika Waititi said they were doing virtual production with an LED wall because otherwise, they wouldn't be able to afford to make.”

Real-time rendering engines don’t need to pre-render, hence the name. That makes changes faster and adds up to significant time savings overall. Productions can also save money because they don’t need to make separate assets for previs and for final images. Producers should be aware, however, that the models need to be optimized for real-time engines, which is a specialized skill set.

Previs can be so extensive that it can be shown to test audiences and reworked as needed before going into production. This means there’s a much lower chance of needing to do costly reshoots and the overall results should be better as well.

VP revolutionizes the job of producers who are comfortable taking advantage of the benefits. A well-produced virtual production will have far less uncertainty going into production than a green-screen-heavy project and may even come in under budget.

Director

Compared to green screen shoots, virtual production on an LED wall is a boon for directors overall. When VFX aren’t finalized until post-production, directors have to make a lot of educated guesses, and those guesses can cause budgets to balloon. With VP, previs is much closer to the final product, so it’s easier for directors to collaborate. DPs, editors, virtual artists, and more can all weigh in before changes get costly.

What you see is what you get in Virtual Production.

Live VFX mean that directors can shoot more on instinct because they can see what’s going on in the scene instead of approximating the framing and lighting. This also helps actors because they don’t need to rely on their imaginations and markers. On a green screen shoot, directors have to spend a significant amount of time simply describing to the actor where they are in the scene and how everything will look.

Overall, what you see is what you get in VP. This creates more chances for collaboration and creativity and fewer chances of reshoots, which frees up time for other things.

Director of Photography

Virtual production can be a breath of fresh air for DPs who’ve been shooting mostly on green screens. It’s exciting to be able to shoot more than just actors on a green or blue background and it takes a lot of the guesswork out of lighting.

Real-time rendering engines give DPs useful pre-production tools. Traditional storyboards and previs animations are not very realistic, are siloed off from the rest of the production, and are time-consuming to modify. Previs made in Unreal Engine, by contrast, uses built-in features that let DPs add realistic lights, try out shots by moving the camera around, and change lenses. This architecture visualization shows how turning lights on and off changes a scene, and this lighting tutorial walks through the tools in Unreal Engine. DPs can also do virtual location scouting in VR headsets, using hand controls to interact with the environment much as they would on a physical shoot. These tools allow them to collaborate more easily in pre-production.

During production, they are able to light the actors to match the VFX, whereas on a green screen shoot the background lighting isn’t finalized until post. This means more time spent on prep, but it eliminates some of the most egregious green screen lighting issues.

Lighting can even be automated with DMX-networked lights so shoots “can move a lot faster with virtual production sets,” Hsu said. The lights can be synchronized with the rendering engine, so that when a physical light is dimmed in real life, the rendered scene on the wall is matched automatically. This might sound more complicated, but Hsu also said that the “gaffer and the DP have more control. We connect the lights to the system to pull the information from the scene. It's almost making your job easier because you don't have to match those colors.”
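To make the idea of synchronized lights a little more concrete, here is a minimal sketch of the kind of conversion involved. DMX channels carry 8-bit values (0 to 255), and one universe holds 512 channels. Everything else here is an assumption for illustration: the function names, the normalized 0.0 to 1.0 scene values, and the four-channel fixture layout (dimmer, red, green, blue) are hypothetical and do not describe ARwall's system or any particular light.

```python
from typing import Dict, Tuple

DMX_MAX = 255          # DMX channel values are 8-bit
UNIVERSE_SIZE = 512    # channels in one DMX universe


def to_dmx(level: float) -> int:
    """Map a normalized 0.0-1.0 level to a 0-255 DMX value, clamping out-of-range input."""
    clamped = max(0.0, min(1.0, level))
    return round(clamped * DMX_MAX)


def light_to_dmx(intensity: float,
                 rgb: Tuple[float, float, float],
                 start_channel: int = 1) -> Dict[int, int]:
    """Build {channel: value} for a hypothetical 4-channel fixture: dimmer, R, G, B."""
    if not 1 <= start_channel <= UNIVERSE_SIZE - 3:
        raise ValueError("fixture does not fit in the universe at this start channel")
    levels = (intensity, *rgb)
    return {start_channel + i: to_dmx(level) for i, level in enumerate(levels)}


if __name__ == "__main__":
    # Hypothetical scene light: full intensity, warm color, fixture patched at channel 10.
    print(light_to_dmx(1.0, (1.0, 0.0, 0.2), start_channel=10))
```

In a real setup the resulting values would be sent to the fixtures by whatever DMX interface the stage uses; that part is deliberately left out here.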

DPs do need to watch out for certain things when working on a virtual production. The pixel pitch needs to be small enough to avoid moiré, which gets worse the closer the camera is to the screen. Moiré is easy to see on a monitor, so it’s fairly easy to avoid. It’s also important to watch out for hotspots reflecting off the LED wall.

A DP will likely have extra work in pre-production, but the benefits of VP are gigantic. Being able to see everything in the frame brings enjoyment and creativity to the job.

Virtual Art Department

The virtual art department is a go-between for the traditional art department and the previs department. The VAD builds out models that need to look good but also be optimized for real-time rendering. They have expanded responsibilities compared to a traditional art department because they need to make production-ready assets instead of passing off their work to the VFX department or set building.

Virtual Production Supervisor

Virtual production supervisors bridge the gap between traditional styles of production and VP. They “interact with the rest of the crew to make sure they understand what's needed and communicate that on a higher level,” Hsu said. Part of their job is also to oversee the creation and use of real-time assets that will be used across departments in pre-production and production.

They’re also in charge of the physical tech on a virtual production shoot such as the LED wall as well as the creation and use of the virtual scout locations. In short, they manage everything that’s unique to virtual productions.

Having someone “who can connect the dots makes all the difference in the world. And that's what virtual production supervisors do. Guys like me, I don't necessarily drive every aspect of Unreal Engine or the camera, or everything else, but I know how it all interconnects and I know how it's supposed to work,” Adcock said.

Editor

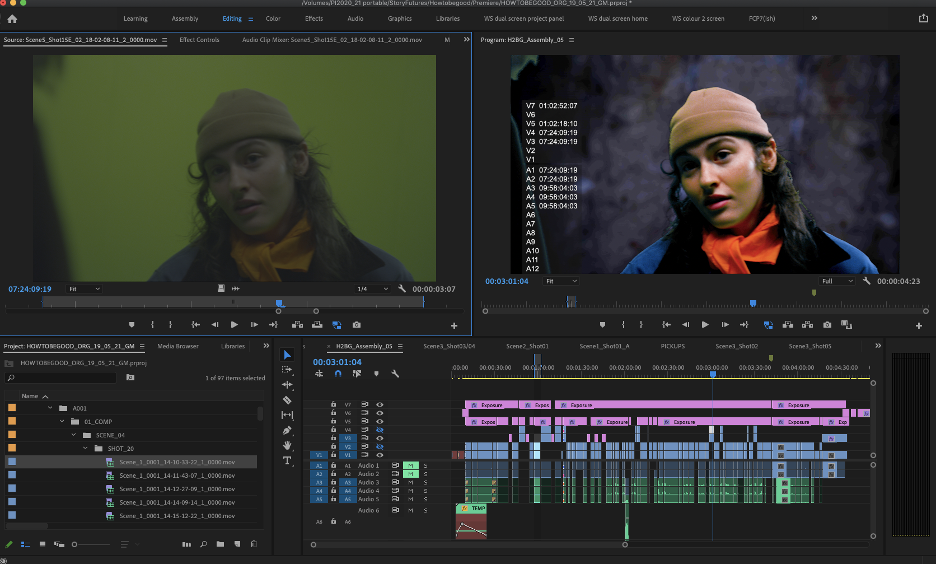

An editor's job begins much earlier with virtual production than in a traditional shoot. Throughout pre-production, VFX shots are edited together for previs, so during post-production those previs shots can be replaced with the completed shots that contain the actors. In a traditional green screen shoot, editors would instead receive shots of the actors with a green background that still need to be composited.

The post-production process is more iterative for VP, with a lot of the work front-loaded into pre-production instead of post. This gives editors the chance to collaborate more and to catch blocking or story issues early, which can give better results because it reduces delays and budget creep.

One potential negative of the new workflow is that editors traditionally come into post-production with fresh eyes when looking at the footage. With VP this is no longer the case, because they do more work in pre-production, so they need to work hard to maintain an objective view of the footage.

With virtual production, post-production is more similar to a project that was shot on location than a project shot on a green screen, because overall the vast majority of VFX have already been completed and are in the shots.

Previs Creator

Previs is traditionally strictly pre-production, but with a real-time engine, those lines blur. The assets created for VP can now be used in production if they’re of high enough quality, which saves time because work isn’t duplicated.

Traditionally, previs is set apart from the rest of the departments, but with VP it becomes much more connected. Previs people might wind up working directly with the director, DP, and editor. As a result, the job of previs becomes more important and more creative.

Stunt Coordinator

Real-time rendering engines are great for stunt coordinators because their built-in physics lets stunts be simulated more quickly and accurately.

Using Unreal Engine is safer because it’s easier to test stunts accurately before they’re performed. Creative uses of foreground sets combined with LED walls also make stunts safer because they take place in front of a screen instead of in more dangerous locations or setups.

Stuntvis is similar to previs, but with a more specific focus. Stuntvis does not edit shots together like previs. Instead, the emphasis is on the continuous action visualized in 3D space. Stuntvis is all about trying out different action sequences, with the different departments weighing in, and modifying them accordingly. Since everything is rendered in real time, it’s relatively easy to make changes, which gives more creative control.

Production Designer

Production design on a standard set is generally done with 3D programs, but it’s even easier with real-time rendering engines because of their speed and ease of use. Virtual scouting helps production designers visualize the set and sight lines before building begins, which means less duplicated work. It also allows them to experiment and collaborate more with the other departments. Production designers work closely with the virtual art department to figure out what will be built out in the foreground vs. what will be rendered on the wall.

Virtual Imaging Technician

A virtual imaging/production technician “makes sure that all the hardware including the LED wall, and tracking hardware are working together and correctly,” Hsu said.

This is the person who sets up the modular wall and wires it together along with the LED processor (if one is being used). Depending on the shoot, there will be varying setups such as multiple LED walls at different angles or curved panels. They also set up the camera tracking sensors that are needed to achieve parallax.
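To see why camera tracking matters for parallax, it can help to look at the geometry in a simplified top-down view. The sketch below assumes a flat wall and a point in the virtual scene that sits behind it, and it works out where on the wall that point has to be drawn so it lines up from the camera's position. The function name `wall_x` and the distances used are made up for this illustration. A real system renders a full perspective view for the tracked camera rather than single points.

```python
def wall_x(camera_x: float, camera_z: float,
           point_x: float, point_z: float,
           wall_z: float) -> float:
    """Return the x position on the wall plane where a virtual point should appear.

    Top-down view: z is the distance away from the stage, x is left/right.
    The wall is the plane z = wall_z, the camera is in front of it, and the
    virtual point is on or behind it. The result is where the straight line
    from the camera to the virtual point crosses the wall.
    """
    if not camera_z < wall_z <= point_z:
        raise ValueError("expected camera_z < wall_z <= point_z")
    t = (wall_z - camera_z) / (point_z - camera_z)
    return camera_x + t * (point_x - camera_x)


if __name__ == "__main__":
    # Hypothetical setup: wall 4 m from the camera, virtual tree 12 m away.
    for cam_x in (0.0, 1.0):
        x = wall_x(cam_x, 0.0, point_x=0.0, point_z=12.0, wall_z=4.0)
        print(f"camera at x={cam_x:.1f} m -> draw tree at x={x:.2f} m on the wall")
```

When the camera moves sideways, the drawn position of a distant object has to move with it, while something at the wall's own depth stays put. Without tracking data the wall cannot know where the camera is, so the background would look like a flat picture instead of a space with depth.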

Virtual Production Operator

A virtual production operator runs the scenes from the computer. “They are the game engine expert or the person who makes sure what you're seeing in the virtual scene looks correct, like if the animation or virtual camera movements are right and if the color matches what the DP and the gaffer team require,” Hsu said.

This position can be combined with the virtual imaging technician and the virtual production supervisor if you have a lower budget or if the regular crew already has some virtual production skills.

Visual Effects Supervisor

Working with an LED wall is a large change for a visual effects supervisor. It benefits everyone to be able to see the VFX in production, but it means that they need to be completed in pre-production instead of post-production.

A challenge when using a real-time rendering engine is that the models need to be built efficiently so that the frame rate doesn’t drop. If the wall is rendering at 60 fps, every frame has to be ready in about 16.7 milliseconds, so the scene has to be much more optimized than in traditional offline rendering, where the only limits are people's patience and the time budgeted.
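As a rough illustration of that constraint, the time available per frame is simply one second divided by the frame rate. The sketch below is only arithmetic. The function names `frame_budget_ms` and `fits_budget` are made up for this example, and the 25 ms render time is a hypothetical figure, not a measurement from any production.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to render one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def fits_budget(render_ms: float, fps: float) -> bool:
    """True if a scene that takes render_ms per frame can keep up with the target fps."""
    return render_ms <= frame_budget_ms(fps)


if __name__ == "__main__":
    for fps in (24, 30, 60):
        print(f"{fps} fps leaves {frame_budget_ms(fps):.1f} ms per frame")

    # Hypothetical scene that takes 25 ms to render each frame.
    print("25 ms scene holds 30 fps:", fits_budget(25.0, 30))
    print("25 ms scene holds 60 fps:", fits_budget(25.0, 60))
```

A scene that is comfortable at 30 fps can miss the budget entirely at 60 fps, which is why assets are built with game-style efficiency in mind.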

Powerful GPUs that have come out fairly recently, along with real-time rendering engines that were built for gaming, are the reasons that photorealistic images can be rendered this fast. Assets should be built using similar techniques to game asset development to ensure they are efficient enough to render at the proper frame rate.

Some traditional problems with green screens are solved with VP. A large benefit is that green screen spill isn’t a problem, since an LED wall showing the scene won’t reflect green light onto the actors. An LED wall can even be made green to pick up some green-screen-type shots without spill. Another VP bonus is that green screens tend to look worse the tighter the shot, whereas LED walls look great for close-ups.

It’s much easier for visual effects supervisors to work and collaborate because it’s a case of what you see is what you get. The more abstract a visualization is, the more communication is necessary for everyone to understand what’s going on.
