Hollywood and Madison Avenue have been putting actors in front of fake backgrounds since Hitchcock was a teenager. The key word in that sentence? Fake. Because 100 years later, filmmakers are still wrestling with the same challenge in virtual production.

Since films and series started using LED walls and video game engines to bring faraway locations into studios, virtual production has flashed a ton of promise. Studios don’t have to ship crews around the world. Reflections look real. Actors don’t have to pretend the green wall behind them is more than a green wall. Post-production departments can get a head start on their work.

But anyone who has actually filmed in virtual production will tell you the same thing Hitchcock, Kubrick, and all the classic "rear projection" artists would tell you:

It’s way too easy to make a virtual background look fake.

From Russia with Love, 1963

Why Do Virtual Backgrounds Look That Way?

The human brain is good at perceiving inauthenticity. Over millennia, humans evolved a mechanism called “ensemble lifelikeness perception,” which automatically detects minor distortions in the world.

In more primitive times, these distortions indicated potential dangers. This is why we can detect faces that don’t quite look human (prehistorically indicating potential illness or injury—and nowadays, deepfakes). It's why we can spot false laughter and crying, bad acting, and other potential indications of, well, traps.

In virtual production, there are several common issues that trip the brain’s inauthenticity alarm:

- When the camera moves and the background doesn’t microscopically shift with the proper parallax that the real world does (our brain detects that the background is flat)

- When the background does shift with the proper parallax, but not in real time (the real world doesn’t have latency)

- When there’s a mismatch of perspective between the background and the physical foreground/actors/props (the real world is generally not askew)

- When there’s a mismatch of color balance between the virtual and the real

- When lighting in the foreground changes but doesn’t affect the background (our brains expect light to behave certain ways)

- When production lights reflect off of the plastic LED wall that is displaying the virtual background

- When the camera is too close to the virtual background, and can actually see the pixels generating the image

- When the camera's shutter is out of sync with the refresh rate of the LED wall, and scan lines appear

- And of course, when an actor or object should be casting a shadow, but for some reason is not (see also: Why Does The Mandalorian Never Stand Next To Walls?)

These are only some of the more obvious ways a virtual set looks fake. The problem is, these challenges disappear when filming in a real location.

After all, in the real world, the rules of physics can be taken for granted.

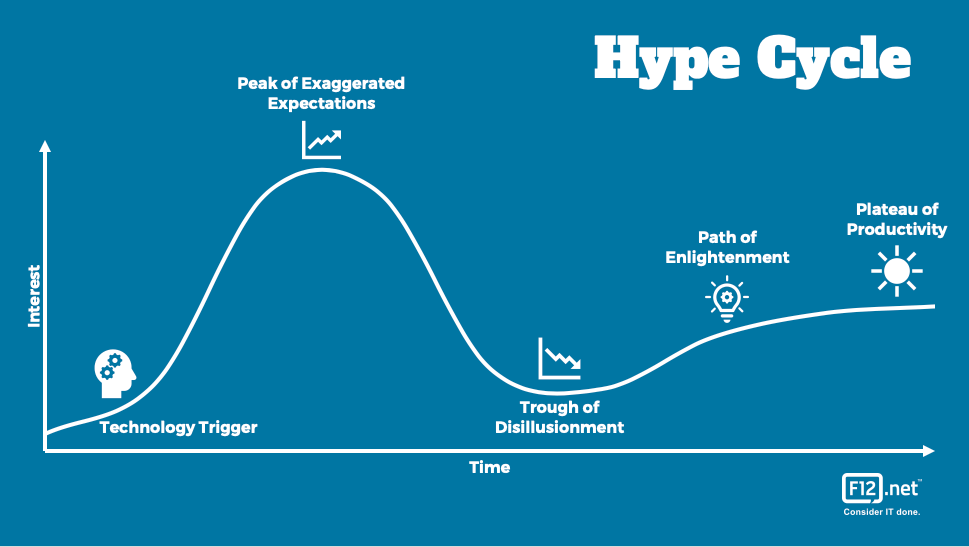

Every new field goes through a hype cycle. And these flaws are what threaten to push professionals curious about virtual production into the Trough of Disillusionment.

When you’ve spent your career framing gorgeous shots and telling compelling stories, it’s hard to be enthusiastic about the idea of making your shot look “not fake.”

Until this changes, virtual production technology will struggle to take off.

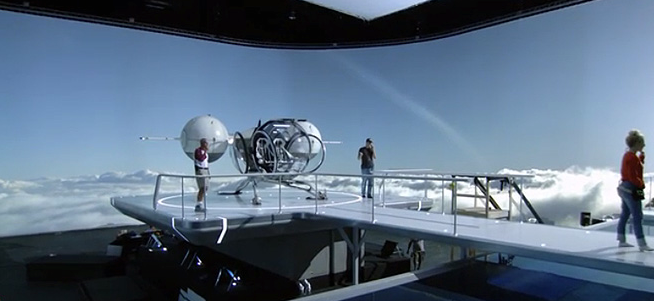

Oblivion made virtual production look real in 2013, through manual effort.

The Good News: Data Can Solve All Of These Problems—Automatically

Though our brains are wired to detect inauthenticity, science also shows that when the world lines up with our expectations, we unconsciously encode our observations as factual—even when we know something isn’t physically possible.

This is just a fancy way of saying, “our brains can be tricked into thinking Superman is actually flying.” This is the basis of every good magician’s show and all good special effects in movies.

Good filmmaking—and virtual production—nails this perceptual trickery. It satisfies our brains, so we simply don’t question whether the guy wearing Pedro Pascal’s helmet is actually in a Tunisian desert.

Right now, filmmakers solve the “fake factor” in virtual production through manual tweaks, guess-and-check, and writing around shots that will likely look fake with virtual backgrounds.

But ultimately, many virtual production challenges are about physics—which means we can use math to solve them. And unlike in math class, we can just let the computers do the work this time.

Virtual production already has a standard solution to the parallax problem: By sharing data between the camera and the background wall, we can automatically move backgrounds based on the position of the camera so the human eye perceives the motion as realistic.
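To make the geometry concrete, here is a minimal sketch of the math behind that camera-to-wall data sharing. It is not how any particular product does it; it assumes a simplified top-down 2D stage where the wall is a flat line, and the function name `wall_x_for_point` and its parameters are made up for illustration.

```python
def wall_x_for_point(camera_x: float, camera_dist: float,
                     point_x: float, point_depth: float) -> float:
    """Return where on the wall (x, in meters) a virtual point should be drawn.

    Assumed top-down 2D model: the wall is the line z = 0, the tracked
    camera sits camera_dist meters in front of it, and the virtual point
    sits point_depth meters behind it.
    """
    if camera_dist <= 0:
        raise ValueError("camera must be in front of the wall")
    if point_depth < 0:
        raise ValueError("virtual point must be on or behind the wall")
    # Fraction of the camera-to-point sight line that lies in front of the wall.
    t = camera_dist / (camera_dist + point_depth)
    # Walk that fraction along the sight line to find where it crosses the wall.
    return camera_x + (point_x - camera_x) * t


if __name__ == "__main__":
    # A virtual tree 4 m behind the wall and a mountain 4 km behind it, both at x = 0.
    for cam_x in (0.0, 0.5, 1.0):
        tree = wall_x_for_point(cam_x, 4.0, 0.0, 4.0)
        mountain = wall_x_for_point(cam_x, 4.0, 0.0, 4000.0)
        print(f"camera x={cam_x:.1f}  tree at {tree:.3f}  mountain at {mountain:.3f}")
```

As the camera slides sideways, the mountain's drawn position follows the camera almost one-to-one while the tree's moves only half as far. That difference is the parallax the brain expects. A real stage does this in 3D, for every pixel, every frame, which is why latency matters so much.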


We can apply this same kind of data-sharing automation to many of the other problems I mentioned.


For example:

- Data can be used to automatically color-balance an LED wall against the real-world foreground elements, so the two match in camera.

- Data can be used to auto-detect perspective problems, allowing a filmmaker to snap the LED background against the foreground perspective she wants.

- Data can be used to auto-sync the virtual and physical lights when you make changes or have actors move through a space. (This is something SHOWRUNNER automates, for example.)

- Computer vision data can be used to auto-detect moiré, flicker, and pixelation, and then either warn the filmmaker or auto-adjust settings to eliminate the issue.

- Similar computer vision data can be used to automatically cast a real actor's shadow into the virtual background.
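As a rough illustration of the color-balance item in the list above, here is one simple way the math could work. It assumes the camera can see a neutral gray reference twice, once as a physical gray card under the stage lights and once as a gray patch displayed on the wall, and that both are read as linear RGB values between 0 and 1. The function names are hypothetical, and this is a sketch of the general idea, not SHOWRUNNER's method.

```python
def channel_gains(foreground_gray, wall_gray):
    """Per-channel multipliers that make the wall's gray match the foreground's.

    Both arguments are (r, g, b) tuples in linear 0..1, as measured in camera.
    """
    gains = []
    for target, measured in zip(foreground_gray, wall_gray):
        if measured <= 0:
            raise ValueError("wall patch channel must be greater than zero")
        gains.append(target / measured)
    return tuple(gains)


def apply_gains(pixel, gains):
    """Scale one wall pixel by the gains, clamped to the displayable 0..1 range."""
    return tuple(min(1.0, max(0.0, c * g)) for c, g in zip(pixel, gains))


if __name__ == "__main__":
    # In camera, the wall's gray reads slightly magenta next to the real gray card.
    gains = channel_gains((0.5, 0.5, 0.5), (0.5, 0.4, 0.625))
    print("gains:", gains)
    print("corrected pixel:", apply_gains((0.2, 0.4, 0.5), gains))
```

A per-channel gain like this only corrects overall white balance. Matching contrast and saturation would take more than three numbers, but the principle is the same: measure both sides in camera, then let the computer close the gap.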


I believe that the sharing of data between devices and apps is precisely what’ll bring virtual production into the mainstream.

And once film directors are free from making things look “not fake,” they can focus on the exciting part: using virtual production to do more than we can in the real world.

Shane Snow is the CEO of SHOWRUNNER.
